Building an In-House Observability Platform with a Data Lake (AWS S3 + Apache Iceberg)

Observability – encompassing logs, metrics, and traces – is the nervous system of modern IT operations. But it comes at a cost. As cloud-native applications and microservices proliferate, the volume of telemetry data has exploded. Companies using traditional SaaS observability platforms (Datadog, New Relic, Splunk, etc.) often face skyrocketing bills 💸 and fragmented data silos ⛓️💥. In fact, at large scales (hundreds of GB/day of logs), SaaS tools can cost hundreds of thousands to millions of dollars per year. Beyond cost, relying on vendors means your valuable operational data lives in someone else’s platform, potentially limiting how you can analyze or retain it.

An emerging solution is to build an in-house observability platform backed by a unified data lake – typically using Amazon S3 for cheap, durable storage and Apache Iceberg as the table format for reliable data management. Data lakes are increasingly central to effective observability, serving as a single repository for all telemetry and enabling deep analytics and AI-driven insights that are hard to achieve with siloed tools. By combining open telemetry standards (such as OpenTelemetry) with an open data architecture, enterprises can finally own their observability data instead of handing it over to a vendor. This blog post explores why an S3 + Iceberg data lake approach can be a game-changer for observability, the benefits and trade-offs to consider, and how to present a compelling case to both technical leaders and executives.

Why Use a Data Lake for Logs, Metrics, and Traces?

Traditional observability stacks often segregate data types: logs in one system, metrics in another, tracing in yet another. This fragmentation makes it hard to correlate issues across different signals and typically requires expensive licenses for each tool. In contrast, a data lake approach centralizes all telemetry data in one place, eliminating data silos. For example, instead of sending logs to a log analytics SaaS and metrics to a time-series database, all data lands in S3 as Parquet files tracked by Iceberg tables. This unification allows powerful cross-domain queries – you can join traces with logs or metrics in a single SQL query – something nearly impossible across disparate SaaS tools.
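As a toy illustration of such a cross-signal query, the sketch below uses Python’s built-in sqlite3 module as a stand-in for a lake query engine such as Trino or Athena. The `traces` and `logs` tables, their columns, and the shared `trace_id` key are hypothetical; in the lake they would be Iceberg tables, and the same standard-SQL join would run on your engine of choice.

```python
import sqlite3

def find_error_logs_for_slow_traces(conn, min_duration_ms):
    """Return (trace_id, service, duration_ms, message) for slow traces that logged errors."""
    query = """
        SELECT t.trace_id, t.service, t.duration_ms, l.message
        FROM traces AS t
        JOIN logs AS l ON l.trace_id = t.trace_id
        WHERE t.duration_ms >= ? AND l.level = 'ERROR'
        ORDER BY t.duration_ms DESC
    """
    return conn.execute(query, (min_duration_ms,)).fetchall()

# Hypothetical sample data: two signals sharing a trace_id column
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE traces (trace_id TEXT, service TEXT, duration_ms INTEGER);
    CREATE TABLE logs (trace_id TEXT, level TEXT, message TEXT);
    INSERT INTO traces VALUES ('t1', 'checkout', 2300), ('t2', 'checkout', 120), ('t3', 'search', 1800);
    INSERT INTO logs VALUES
        ('t1', 'ERROR', 'payment gateway timeout'),
        ('t1', 'INFO',  'retrying payment'),
        ('t2', 'ERROR', 'cache miss storm'),
        ('t3', 'WARN',  'slow index read');
""")

for row in find_error_logs_for_slow_traces(conn, min_duration_ms=1000):
    print(row)
```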

Cost is a driving factor. Cloud object storage like S3 is extremely cheap compared to vendor retention costs. Storing 1 TB of data in S3 Standard costs on the order of $23 per month (and even less on colder storage classes), whereas ingesting that much data into a SaaS platform can cost orders of magnitude more. As one analysis showed, ingesting ~700 GB of logs per day (≈250 TB/year) would incur over $500,000 per year with typical log management tools. Datadog, for instance, might charge ~$0.10 per GB ingested – which sounds low – but about $2.50 per million log events indexed, leading to an annual cost in the millions for full-fidelity data. In an S3 + Iceberg data lake, that same 250 TB/year could be stored for a fraction of the cost (on the order of ~$70k/year in S3 storage), and querying it with a service like Amazon Athena (serverless Presto/Trino-based SQL, priced at $5 per TB scanned) might only add tens of thousands more. In short, moving observability data to your own S3-based lake can cut storage and query costs by 50–90% in many cases. This cost efficiency means you can afford to retain full-resolution telemetry for much longer – months or years instead of weeks – without breaking the bank.
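The storage estimate above is simple arithmetic, sketched here in Python with the unit prices quoted in this section ($23 per TB-month for S3 Standard and $5 per TB scanned for Athena). It treats the full year of data as stored for all twelve months and ignores Parquet compression, request charges, and regional price differences, so read it as a rough estimate; the 300 TB/month scan volume is a made-up example.

```python
# Unit prices quoted in this article; real prices vary by region, storage class, and over time
S3_USD_PER_TB_MONTH = 23.0        # S3 Standard storage
ATHENA_USD_PER_TB_SCANNED = 5.0   # Athena, per TB of data scanned

def annual_lake_cost(stored_tb, tb_scanned_per_month):
    """Rough yearly cost of keeping `stored_tb` in S3 for 12 months plus Athena scans."""
    storage = stored_tb * S3_USD_PER_TB_MONTH * 12
    queries = tb_scanned_per_month * ATHENA_USD_PER_TB_SCANNED * 12
    return storage + queries

yearly_tb = 700 * 365 / 1000  # ~700 GB/day of logs, expressed in TB per year
storage_only = annual_lake_cost(yearly_tb, tb_scanned_per_month=0)
with_queries = annual_lake_cost(yearly_tb, tb_scanned_per_month=300)  # hypothetical scan volume
print(f"{yearly_tb:.1f} TB/year: ${storage_only:,.0f} storage, ${with_queries:,.0f} with queries")
```

With these inputs the storage line comes to about $70.5k per year, in line with the ~$70k figure, and the hypothetical scan volume adds $18k.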

Another motivation is flexibility for analytics and AI/ML. A data lake in an open format unlocks your telemetry data for any kind of analysis. You’re not limited to whatever queries or dashboards a vendor provides. Data scientists can run Python notebooks, BI teams can join observability data with business data, and you can apply machine learning to detect anomalies or predict incidents. Applying AI/ML to operations depends on large volumes of rich historical telemetry, which is far easier to assemble in a data lake than across siloed tools with short retention windows. With S3 + Iceberg, building custom anomaly detectors or feeding data to an AI assistant (like an LLM that can sift through logs) becomes much easier, since all the data is accessible via standard SQL and data science tools.

Finally, data ownership and compliance drive the in-house approach. By keeping observability data in your own AWS account (your S3 buckets), you maintain full control over security, access policies, and compliance requirements. There’s no need to ship potentially sensitive logs (which may contain customer data or secrets) to a third party. In essence, an observability data lake lets you treat telemetry as a first-class data asset that you own, rather than a byproduct you offload to a vendor.

Benefits of a Data Lake Architecture (S3 + Apache Iceberg)

Building your observability platform on S3 and Apache Iceberg comes with a host of advantages:

Unified Storage & Single Source of Truth: All logs, metrics, traces, and even related events are stored together in a central data lake, rather than spread across separate tools. This makes it trivial to correlate across data types – e.g. linking a spike in CPU (metric) with a specific error in logs and the corresponding trace – using one query interface. Engineers and SREs no longer have to swivel-chair between systems, speeding up root cause analysis.

Massive Cost Savings: Object storage is dramatically cheaper than SaaS retention. You pay roughly $0.023 per GB-month on S3 Standard, versus SaaS platforms that charge by ingestion and indexing volume (often an effective $2–$3 per GB once indexing and retention are included) or substantial per-host fees. Iceberg allows you to use this cheap storage without sacrificing queryability. Moreover, you avoid vendor “overage” penalties and can use cloud-native cost controls (like S3 lifecycle policies, colder storage classes, or committed-use discounts on compute) for further savings. In short, you can retain more data for longer without budget angst.

Open Format & No Vendor Lock-In: Apache Iceberg is an open-source table format backed by a broad community. Data is stored in open columnar files (Parquet/ORC) on S3 and tracked with Iceberg’s metadata layer. This means your observability data is not locked into any proprietary system – many engines (Spark, Trino, Flink, Presto, etc.) can read it out-of-the-box. By using open ingestion standards like OpenTelemetry and an open storage format, you ensure your data remains portable and accessible. This alleviates the classic concern of being stuck with a single vendor’s tool or paying exorbitant fees to get your own data back later.

Schema Evolution and Flexibility: Observability data schemas change as applications evolve – new log fields appear, metric names change, etc. Apache Iceberg supports in-place schema evolution (adding, dropping, renaming, or reordering columns, and safe type promotions) as a metadata-only change, so you can evolve the schema without rewriting existing data files or losing historical data. Iceberg also supports partition evolution, so a table’s partitioning can change without rewriting the data already stored. This is critical in a fast-moving DevOps environment where telemetry formats aren’t static. Iceberg tables also handle semi-structured data (e.g. logs with nested JSON) through nested struct, list, and map column types, or you can keep the raw payload in a string column. You get the best of both worlds: schema when you need it for structured queries, but tolerance for evolving data shapes.

ACID Transactions & Data Integrity: Unlike raw data lakes of old, Iceberg brings database-like reliability to your S3 storage. Every write is an atomic commit of a new table snapshot, and concurrent writers are coordinated with optimistic concurrency (a writer whose commit conflicts re-applies its changes and retries). For observability, this means you won’t end up with partial or corrupt data in an incident timeline – e.g. a batch of spans is either fully visible to queries or not visible at all. These guarantees apply per table: a commit to a traces table and a commit to a logs table are separate transactions. Readers always see a consistent snapshot, which is essential when you rely on this data to make critical decisions during outages. Iceberg’s design (immutable data files with a transactional metadata layer) was created specifically to fix the consistency and performance problems of Hive-style tables at scale.

Performance & SQL Query Power: Modern “lakehouse” tech like Iceberg closes much of the performance gap between a data lake and specialized query engines. Features like hidden partitioning, column statistics for predicate pushdown, and query planning based on metadata allow even huge tables to be queried efficiently. With the right cluster or query service, some teams report interactive query speeds on Iceberg that approach those of dedicated time-series databases – all while using standard SQL and without the cost overhead. In practice, you might use a query engine like Trino or Amazon Athena for responses in seconds on recent, well-partitioned data, and Spark for heavy batch analyses. Furthermore, Iceberg supports time-travel queries (reading the table as of a past snapshot or point in time), which is useful for reproducing exactly what the data looked like at a given moment, for example during an incident post-mortem before late-arriving data was appended.

Long-Term Retention & Analytics/ML Friendly: Because of the low storage cost and flexible schema, you can retain raw observability data long-term – far beyond the 30–90 day window many SaaS tools offer. This unlocks rich historical analysis for capacity planning, security forensics, and feeding machine learning models. For example, you might store years of logs and use Spark MLlib or pandas on a subset to train anomaly detection models specific to your environment. Essentially, your data lake can become the foundation for AI-driven observability, where algorithms mine the trove of data to surface insights that no human would easily spot in real-time streams.
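As a deliberately simple stand-in for a trained model, the sketch below flags minutes whose error count sits far above a rolling baseline (a z-score over the preceding window). The window size, threshold, and sample counts are assumptions for illustration; in practice the counts would come from a SQL aggregation over the logs table, and a production detector would also need to handle seasonality and trends.

```python
from statistics import mean, stdev

def zscore_anomalies(counts, window=10, threshold=3.0):
    """Return indexes whose value is more than `threshold` standard deviations
    above the mean of the preceding `window` values (window must be >= 2)."""
    anomalies = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # A perfectly flat history has sigma == 0; skip it rather than divide by zero
        if sigma > 0 and (counts[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical per-minute ERROR counts for one service
error_counts = [4, 5, 3, 6, 4, 5, 4, 3, 5, 4, 5, 4, 41, 6, 5]
print(zscore_anomalies(error_counts))  # -> [12] (the spike to 41 errors)
```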

In summary, an S3 + Iceberg observability platform promises centralization, cost efficiency, flexibility, and control. It lets you do more with your data (because you own it and can apply any tools to it) at a lower cost, while leveraging cutting-edge open technology to maintain performance and reliability.

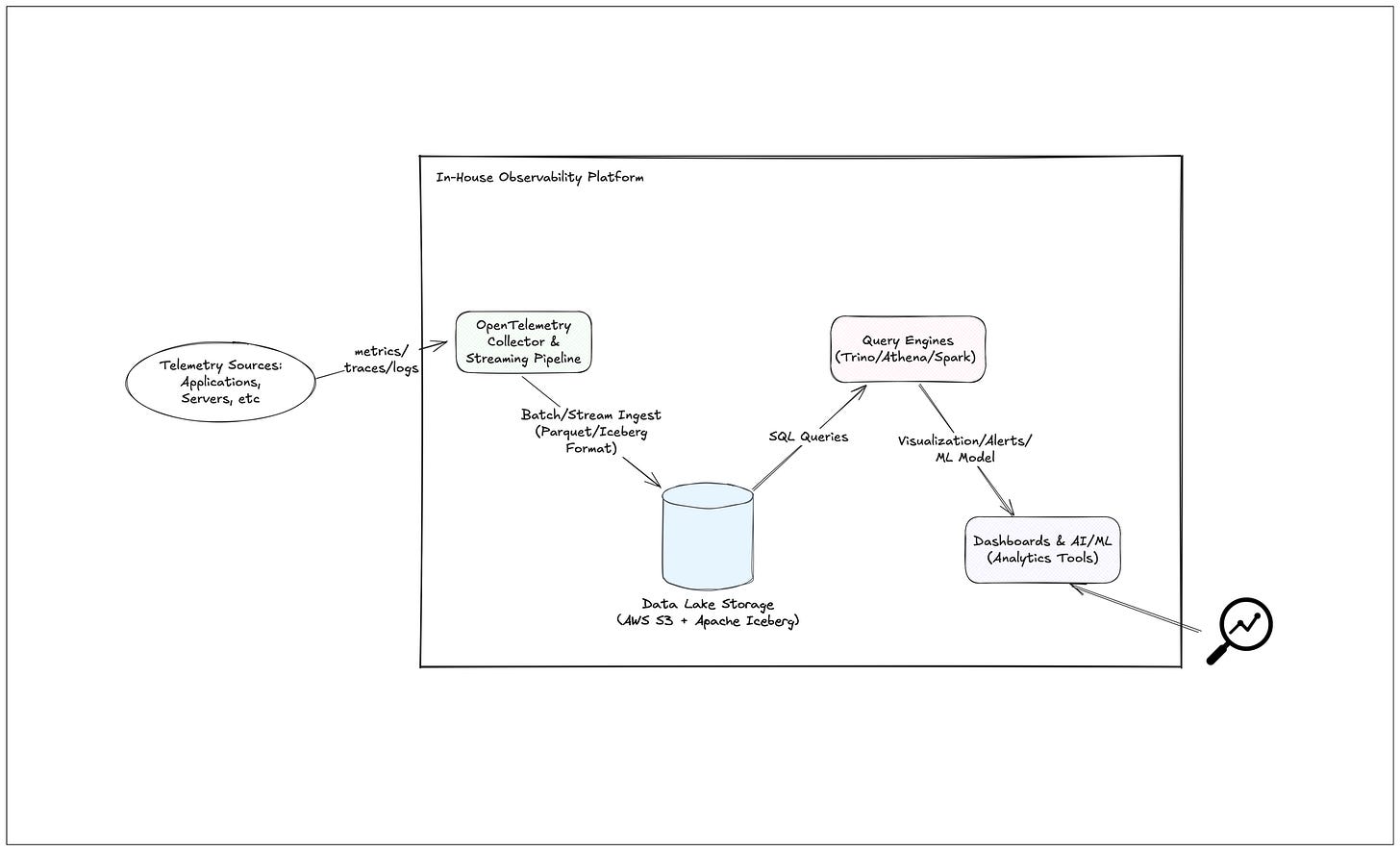

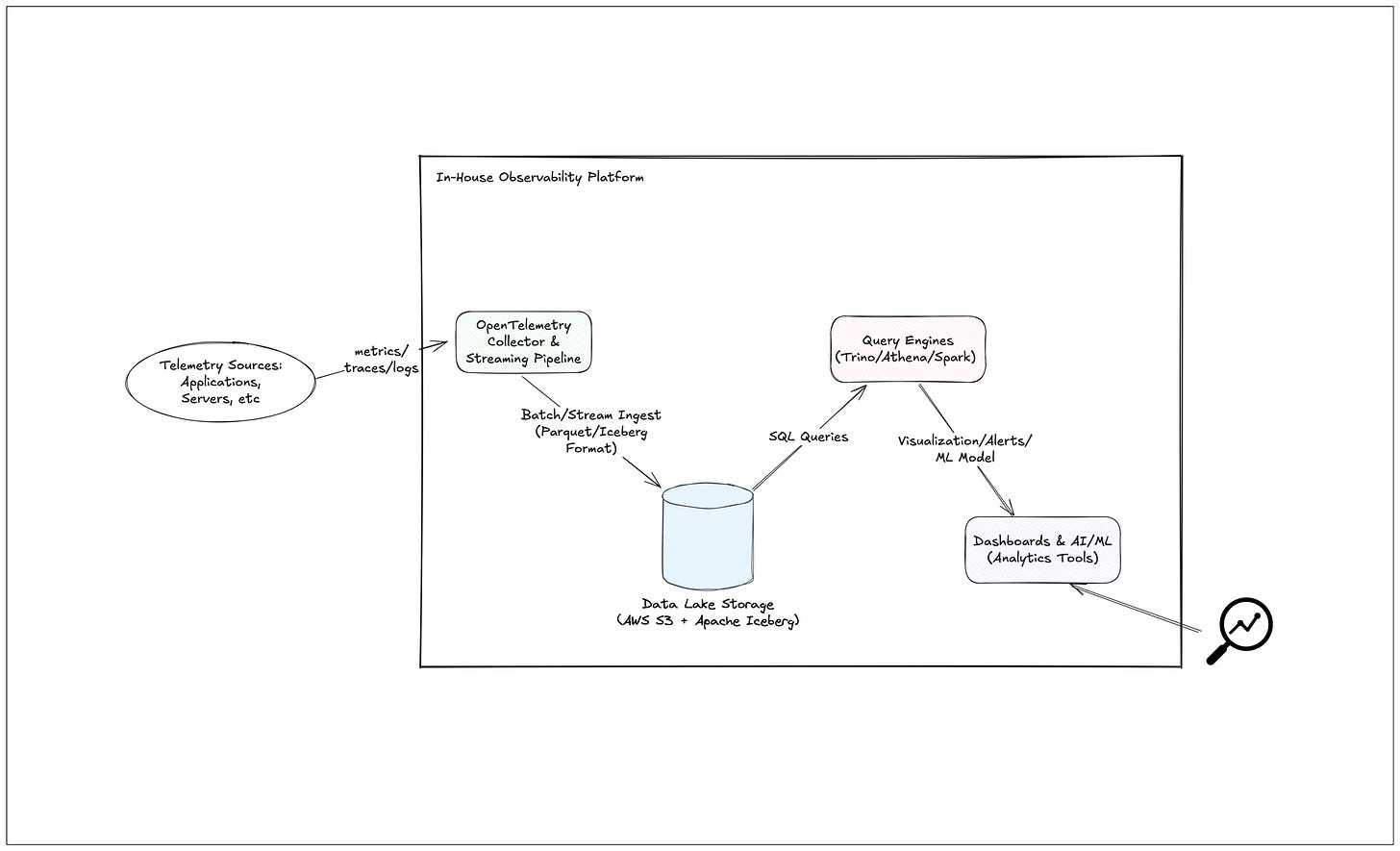

Architecture Overview: From Data Generation to Analytics

In this design, telemetry sources (applications, microservices, hosts, etc.) emit logs, metrics, and traces which are collected by a flexible pipeline (often using the OpenTelemetry Collector or similar agents). Data can be streamed in near-real-time via Kafka, Kinesis Data Firehose, or Fluent Bit, and landed into Amazon S3 in an optimized columnar format (Parquet files partitioned by time). Using Apache Iceberg’s table layer on S3, these raw files are tracked as managed tables with schema and partitions.
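To make the landing step concrete, here is a minimal Python sketch of the kind of transformation a collector or stream job performs before writing to the lake: flattening a nested log record into a single-level row and deriving a daily partition value. The input shape is a simplified, hypothetical record loosely modeled on OpenTelemetry’s log data model (it is not the exact OTLP format), and the `resource.`/`attr.` column prefixes are an arbitrary naming choice.

```python
from datetime import datetime, timezone

def to_log_row(record):
    """Flatten a nested log record into a single-level dict suitable for a
    columnar table row, deriving a daily partition value from the timestamp."""
    ts = datetime.fromtimestamp(record["time_unix_nano"] / 1e9, tz=timezone.utc)
    row = {
        "timestamp": ts.isoformat(),
        "date": ts.date().isoformat(),  # 'YYYY-MM-DD', used as the partition column
        "level": record.get("severity_text", "UNSPECIFIED"),
        "message": record.get("body", ""),
    }
    # Prefix resource-level and record-level attributes so their keys cannot collide
    for key, value in record.get("resource", {}).items():
        row[f"resource.{key}"] = str(value)
    for key, value in record.get("attributes", {}).items():
        row[f"attr.{key}"] = str(value)
    return row

# Hypothetical incoming record (simplified; not the exact OTLP wire format)
sample = {
    "time_unix_nano": 1_747_310_400_000_000_000,  # 2025-05-15T12:00:00Z
    "severity_text": "ERROR",
    "body": "upstream timeout calling payments",
    "resource": {"service.name": "checkout", "host.name": "ip-10-0-1-23"},
    "attributes": {"http.status_code": 503, "trace_id": "4bf92f35"},
}
print(to_log_row(sample))
```

Iceberg’s hidden partitioning can also derive the day directly from the timestamp (a `days(timestamp)` partition transform), in which case the explicit `date` field is optional.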

Ingesting data might be done through a streaming ETL job – for example, an Apache Flink job reading off Kafka and writing to Iceberg, or simply using AWS Glue batch jobs to regularly compact and organize incoming data. The Iceberg table format ensures each new batch or stream write is transactional and immediately queryable. You might have separate Iceberg tables for logs, metrics, and traces (to optimize each appropriately), or even a single wide table linking all telemetry types (depending on your strategy).
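Conceptually, each Iceberg write produces a new immutable snapshot, and the commit succeeds only if the catalog can atomically swap the table’s current-metadata pointer away from the snapshot the writer started from. The toy model below illustrates that optimistic-concurrency idea and how a chain of snapshots makes time travel possible. It is not Iceberg’s API: real Iceberg writes metadata and manifest files to S3 and relies on the catalog (for example AWS Glue) for the atomic swap, and it uses random snapshot IDs rather than the sequential ones used here for readability.

```python
import threading

class CommitConflict(Exception):
    """Raised when another writer committed first."""

class ToyCatalog:
    """Keeps one pointer per table to its current snapshot and swaps it atomically."""

    def __init__(self):
        self._lock = threading.Lock()
        self._pointers = {}

    def current(self, table):
        return self._pointers.get(table)

    def compare_and_swap(self, table, expected, new):
        # Succeeds only if nobody changed the pointer since `expected` was read
        with self._lock:
            if self._pointers.get(table) is not expected:
                raise CommitConflict(table)
            self._pointers[table] = new

def commit_append(catalog, table, new_files, max_retries=3):
    """Append data files by building a new immutable snapshot on top of the
    latest one; on conflict, re-read the latest snapshot and retry."""
    for _ in range(max_retries):
        base = catalog.current(table)
        snapshot = {
            "id": base["id"] + 1 if base else 1,  # sequential ids for readability only
            "parent": base,
            "files": (base["files"] if base else ()) + tuple(new_files),
        }
        try:
            catalog.compare_and_swap(table, base, snapshot)
            return snapshot["id"]
        except CommitConflict:
            continue  # another writer committed first: rebuild on the new base
    raise CommitConflict(f"{table}: gave up after {max_retries} attempts")

def files_as_of(catalog, table, snapshot_id):
    """Time travel: walk back through parent snapshots to the requested one."""
    snap = catalog.current(table)
    while snap is not None and snap["id"] != snapshot_id:
        snap = snap["parent"]
    if snap is None:
        raise KeyError(f"{table}: no snapshot {snapshot_id}")
    return snap["files"]

catalog = ToyCatalog()
first = commit_append(catalog, "logs", ["f1.parquet", "f2.parquet"])
second = commit_append(catalog, "logs", ["f3.parquet"])
print(catalog.current("logs")["files"])     # files in the latest snapshot
print(files_as_of(catalog, "logs", first))  # table contents as of the first commit
```

Readers that grab `catalog.current(...)` see either the old snapshot or the new one, never a half-written mix, which is the property the ingestion paragraph above relies on.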

On the query side, various compute engines can be used to analyze the data lake. For ad-hoc queries and dashboards, teams often use SQL engines like Trino (formerly PrestoSQL) or Amazon Athena to run fast analytic SQL directly against the Iceberg tables. These engines understand the Iceberg table metadata, so they can read only the relevant partitions and data files (for example, scanning just one day of logs, or a specific service’s metrics) to answer a query efficiently. For more complex processing or machine learning, Apache Spark can be used to load large volumes from S3 and perform distributed computations (Iceberg provides a Spark runtime for reading and writing its tables). The query results can feed into familiar observability tools and visualizations – for instance, you can connect Athena or Trino to Amazon QuickSight or Grafana to create dashboards, or build internal tools that query the data lake for on-demand investigations. Alerts can be defined as SQL queries running on a schedule, detecting patterns in the data lake (e.g. a sudden drop in requests or a surge in error codes).
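An alert defined as a scheduled SQL query can be as small as the sketch below: run a query that returns one number and compare it to a threshold. Here sqlite3 stands in for Trino or Athena, the `http_logs` table and the 20% 5xx-ratio threshold are hypothetical, and the scheduling itself (cron, an orchestrator, or similar) is left out.

```python
import sqlite3
from dataclasses import dataclass

@dataclass
class AlertRule:
    name: str
    sql: str          # query returning a single numeric value
    threshold: float  # fire when the value is strictly greater than this

def evaluate(conn, rule, params=()):
    """Run the rule's query once and report whether it breaches the threshold."""
    (value,) = conn.execute(rule.sql, params).fetchone()
    value = value if value is not None else 0  # no matching rows -> treat as 0
    return {"rule": rule.name, "value": value, "firing": value > rule.threshold}

# Stand-in data: one row per HTTP request, bucketed by minute
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE http_logs (minute TEXT, status INTEGER)")
rows = ([("12:00", 200)] * 90 + [("12:00", 503)] * 10
        + [("12:01", 200)] * 40 + [("12:01", 500)] * 60)
conn.executemany("INSERT INTO http_logs VALUES (?, ?)", rows)

rule = AlertRule(
    name="5xx-error-ratio",
    sql="SELECT AVG(status >= 500) FROM http_logs WHERE minute = ?",
    threshold=0.2,
)
for minute in ("12:00", "12:01"):
    print(minute, evaluate(conn, rule, (minute,)))
```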

This architecture essentially decouples storage and compute: S3 + Iceberg is your durable, scalable storage layer, and you can scale out the compute/query layer as needed (from small on-demand queries to large batch jobs). Importantly, since all data is in one place, analytics that were difficult with siloed SaaS tools become straightforward. For example, a single JOIN across metrics and logs could be done in Trino to correlate a metric anomaly with specific log entries – something that might require exporting data from multiple SaaS tools (if even possible) in the old model. Many organizations are recognizing these benefits; AWS itself offers Amazon Security Lake, which “centralizes security data” in an S3-based data lake – a sign that the data lake pattern is gaining ground for broad observability and analytics.

Implementation Example: Storing Observability Data in an Iceberg Table

One of the advantages of an open data architecture is that it uses common technologies and skills (e.g. SQL, Spark) rather than proprietary APIs. As a brief example, here’s how you could store incoming log events into an Iceberg table using PySpark:

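```python
from pyspark.sql import SparkSession

# Configure Spark with an Iceberg catalog backed by AWS Glue, with data and metadata
# files stored under an S3 warehouse path. A Hadoop-type catalog is simpler to set up,
# but it relies on atomic renames that S3 does not provide, so it is not safe for
# concurrent writers on S3. Assumes the Iceberg Spark runtime and AWS bundle jars are
# already on the Spark classpath.
spark = (
    SparkSession.builder.appName("observability-ingest")
    .config("spark.sql.catalog.my_iceberg", "org.apache.iceberg.spark.SparkCatalog")
    .config("spark.sql.catalog.my_iceberg.catalog-impl", "org.apache.iceberg.aws.glue.GlueCatalog")
    .config("spark.sql.catalog.my_iceberg.io-impl", "org.apache.iceberg.aws.s3.S3FileIO")
    .config("spark.sql.catalog.my_iceberg.warehouse", "s3://my-observability-bucket/iceberg_warehouse")
    .getOrCreate()
)

# Create the table once ("telemetry" is a placeholder Glue database name).
# The schema is an assumed shape for parsed log events; `date` holds the event
# day as a 'YYYY-MM-DD' string and is the partition column.
spark.sql("CREATE NAMESPACE IF NOT EXISTS my_iceberg.telemetry")
spark.sql("""
    CREATE TABLE IF NOT EXISTS my_iceberg.telemetry.observability_logs (
        `timestamp` TIMESTAMP,
        `date`      STRING,
        level       STRING,
        message     STRING,
        attributes  MAP<STRING, STRING>
    )
    USING iceberg
    PARTITIONED BY (`date`)
""")

# Imagine we have a DataFrame of parsed log events matching the schema above
logs_df = ...  # obtained from reading a stream or batch of logs

# Append the DataFrame to the Iceberg table (DataFrameWriterV2 API)
logs_df.writeTo("my_iceberg.telemetry.observability_logs").append()
```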

In the above snippet, Spark is configured with an Iceberg catalog pointing to an S3 location. The logs_df (which could have been created by reading JSON log files or via a streaming source) is written in Iceberg format into the observability_logs table, partitioned by date. Under the hood, this creates Parquet files in S3 along with Iceberg metadata files describing file locations, schema, and snapshots, while the catalog (such as AWS Glue) records which metadata file is current. Once the write commits, the new log data is immediately available to query via any Iceberg-compatible engine. For instance, if the table is registered in the AWS Glue Data Catalog, the same data could be queried in Amazon Athena with a SQL statement (SELECT * FROM observability_logs WHERE date='2025-05-15' AND level='ERROR' ...) or via Trino using the Iceberg connector. Metrics and traces can be handled similarly – e.g., metrics might be aggregated into minute-level time buckets and stored in an Iceberg table partitioned by service and date.
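The minute-level metric rollup mentioned above could look like this in plain Python; in production the same aggregation would more likely run as a Spark or Flink window aggregation before the write. The `(service, epoch_seconds, value)` input shape and the count/avg/min/max output columns are assumptions for illustration.

```python
from collections import defaultdict
from datetime import datetime, timezone

def minute_rollup(points):
    """Aggregate raw (service, epoch_seconds, value) samples into one row per
    service per minute with count, average, min, and max."""
    buckets = defaultdict(list)
    for service, ts, value in points:
        minute_start = ts - ts % 60  # truncate to the start of the minute
        buckets[(service, minute_start)].append(value)
    rows = []
    for (service, minute_start), values in sorted(buckets.items()):
        minute = datetime.fromtimestamp(minute_start, tz=timezone.utc)
        rows.append({
            "service": service,
            "date": minute.date().isoformat(),  # partition columns: service + date
            "minute": minute.isoformat(),
            "count": len(values),
            "avg": sum(values) / len(values),
            "min": min(values),
            "max": max(values),
        })
    return rows

# Hypothetical latency samples (ms) from two services
points = [
    ("api", 1_747_310_400, 120.0),
    ("api", 1_747_310_430, 80.0),
    ("api", 1_747_310_465, 200.0),
    ("db", 1_747_310_410, 15.0),
]
for row in minute_rollup(points):
    print(row)
```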

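The code above illustrates that building on Iceberg doesn’t require reinventing the wheel – you leverage big data frameworks to handle the heavy lifting. Iceberg’s table format takes care of partition tracking, schema handling, and snapshot management, so your team can focus on defining what data to collect and how to use it rather than on low-level storage mechanics. Some upkeep remains, though: frequent streaming writes produce many small files, so compaction and snapshot expiration still need to run on a schedule (Iceberg ships Spark procedures such as rewrite_data_files and expire_snapshots for this, and the AWS Glue Data Catalog can also compact Iceberg tables automatically).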
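To illustrate what compaction does, the toy planner below groups small files into rewrite batches close to a target size using first-fit-decreasing bin packing. Iceberg’s own `rewrite_data_files` procedure uses a bin-pack strategy by default, but this sketch is not its implementation; the 512 MB target and the 100 MB “small file” cutoff are assumptions chosen for the example.

```python
def plan_compaction(file_sizes_mb, target_mb=512, small_threshold_mb=100):
    """Group small files into rewrite batches of at most `target_mb` each
    (first-fit decreasing). Files at or above the threshold are left alone."""
    small = sorted((s for s in file_sizes_mb if s < small_threshold_mb), reverse=True)
    groups = []  # each group is a list of file sizes to merge into one output file
    for size in small:
        for group in groups:
            if sum(group) + size <= target_mb:
                group.append(size)
                break
        else:
            groups.append([size])
    # Rewriting a lone file gains nothing, so only multi-file groups are returned
    return [g for g in groups if len(g) > 1]

# Hypothetical table state: 120 tiny 5 MB files from streaming writes, plus two large files
plan = plan_compaction([5] * 120 + [300, 600])
print([(len(g), sum(g)) for g in plan])
```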

Comparing In-House Data Lake vs. SaaS Observability Platforms

It’s important to acknowledge trade-offs and differences between an in-house data lake approach and traditional SaaS observability solutions:

Cost Structure: SaaS platforms typically charge per host, per GB ingested, or per query – and these bills climb steeply at high volumes. As discussed, logs especially become prohibitively expensive at scale (e.g. Datadog could run >$3M/year for ~250 TB of logs if fully indexed). The data lake model shifts the cost to cloud infrastructure (storage and on-demand compute). Storage is cheap (S3) and effectively pay-as-you-go, and compute/query costs are usage-based. For large environments this can cut total cost by up to an order of magnitude. However, it also means you pay for and manage the infrastructure and operational costs directly instead of receiving a single vendor bill. An executive-friendly way to frame this: we’re turning a recurring high SaaS fee into a much lower cloud infrastructure cost that we control. Plus, any existing cloud spend commitments (AWS enterprise discounts, credits) now work in your favor.

Performance and Latency: SaaS observability tools are often optimized for very fast queries on recent data (e.g. a Splunk search or Datadog query might return in seconds on indexed data). A data lake, by contrast, trades some latency for cost and flexibility. Scanning data on S3 via SQL might take a few seconds or even tens of seconds for complex queries, whereas an in-memory time-series database might be near-instantaneous. For many troubleshooting and analysis tasks, a few seconds is acceptable – but for real-time alerting on high-frequency metrics, you might still need to incorporate a real-time processing layer or caching. A common approach is to combine the two: e.g., ingest into a fast OLAP store (like Apache Pinot) for recent data to achieve sub-second query latencies, while simultaneously archiving all data to Iceberg on S3 for long-term analysis. This hybrid approach ensures alerts and dashboards are snappy, while the data lake serves deeper analytical needs. The key point to convey is that the Iceberg data lake can approximate the performance of specialized stores for many queries, but extremely low-latency needs may require additional architecture considerations. Executives should understand there’s a trade-off: blazing-fast queries vs. cost – and the in-house solution seeks a balance that dramatically lowers cost while keeping query performance reasonable for most purposes.

Feature Richness and Ease of Use: Companies like Datadog and New Relic offer polished UI experiences, out-of-the-box integrations, and turn-key alerting. An in-house data lake will require assembling or building those user-facing components. Engineers will likely use tools like Grafana or custom UIs on top of the data lake for visualization and alerting. While this gives ultimate flexibility (you can design exactly the views and correlations you need), it is an area where initial development effort is higher. Fortunately, the ecosystem is growing – for example, you can plug the data lake into Grafana for dashboards, use SQL-based alerting tools, and leverage open-source front-ends. Still, expect to invest engineering time to achieve the smooth UX of a SaaS platform. On the flip side, your in-house platform can be tailored to your organization’s needs (custom metrics, business context, etc.), whereas SaaS tools are one-size-fits-all.

Data Ownership & Compliance: With SaaS, you are effectively outsourcing data storage and must trust the vendor’s security and compliance. With an in-house S3-based lake, you own the data end-to-end – which can ease compliance audits and allow stricter security controls. For industries with stringent data governance (finance, healthcare), keeping observability data in-house can be a big advantage. Also, if you ever decide to switch tools or vendors, having all data in open formats on S3 means you won’t lose historical telemetry or face costly data migrations. This ownership principle is a significant strategic benefit.

Scalability and Future-Proofing: SaaS platforms abstract away scalability (at a high price), whereas with a DIY data lake you’ll design for scale from the start. The good news is technologies like Iceberg are built for petabyte scale (originating from Netflix’s needs) and can handle massive throughput. S3 scales virtually without limit for storage. The challenge is in scaling the ingestion pipeline and query engines – which is solvable with cloud services (Kinesis, auto-scaling clusters, etc.) but needs careful planning. The data lake approach is often called a “lakehouse” when combined with query engines, and it’s quickly becoming the industry standard for big data analytics. In observability, adopting this architecture now is a forward-looking move that aligns with where the industry is headed (as evidenced by several observability startups and even Splunk’s newer architectures embracing S3-backed storage). However, be prepared: building a robust data pipeline and platform does require engineering expertise, and not every organization wants to be in the business of maintaining such a platform. It’s wise to candidly discuss the complexity (and read through this blog series on building high-volume telemetry pipelines). That said, once built, it can serve as a foundation for not just observability, but also security analytics, BI, and more, providing far more value than a black-box SaaS tool.
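The hybrid setup described under Performance and Latency needs a rule for deciding where each query goes. A minimal sketch, assuming the hot OLAP store keeps a fixed three-day window (a hypothetical value) and that queries always run up to the present: route to the hot store when the whole requested range is still retained there, otherwise to the Iceberg tables. The backend names are placeholders.

```python
from datetime import datetime, timedelta, timezone

HOT_RETENTION = timedelta(days=3)  # hypothetical retention window of the fast OLAP store

def choose_backend(query_start, now=None):
    """Pick a backend for a query covering [query_start, now]: the hot store if
    that whole range is still retained there, otherwise the Iceberg tables on S3."""
    now = now or datetime.now(timezone.utc)
    if query_start >= now - HOT_RETENTION:
        return "hot_olap"      # placeholder name for e.g. a Pinot cluster
    return "iceberg_lake"      # placeholder name for Trino/Athena over Iceberg

now = datetime(2025, 5, 15, 12, 0, tzinfo=timezone.utc)
print(choose_backend(now - timedelta(hours=1), now))
print(choose_backend(now - timedelta(days=30), now))
```

Because every record is also archived to Iceberg, the lake can answer any query; the hot store acts purely as an accelerator for recent time ranges.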

Presenting the Case to Leadership

Building an in-house observability platform on AWS S3 and Apache Iceberg offers a compelling proposition: drastically lower costs, far longer data retention, and freedom to innovate on your telemetry data. You’re essentially applying the proven “data lakehouse” pattern to observability – treating log, trace and metric data as you would big data in an analytics platform, rather than as exhaust to be discarded due to cost. For architects and DevOps leaders, the technical benefits (centralization, flexibility, scale) are clear. For executives, the pitch centers on cost savings, strategic data ownership, and enabling advanced insights (like AI/ML) that drive competitive advantage.

It’s important to set expectations: an in-house solution requires engineering investment. You may need a small team to manage data pipelines, optimize queries, and build user interfaces or integrations on top of the data lake. However, this investment can pay for itself quickly with the savings from SaaS fees. Moreover, it future-proofs the organization – you won’t be stuck in a contract if a vendor raises prices, nor will you be constrained by a tool’s capabilities. Instead, you have full control of a core competency: your ability to understand and troubleshoot your systems.

Many forward-thinking companies have already started down this road, blending open-source and cloud services to build their own observability stacks. The tooling ecosystem is maturing rapidly, making this easier than ever: managed Kafka and Flink services, AWS Glue, and Athena can all reduce the operational burden. Apache Iceberg’s rise as a de facto standard table format for data lakes means you’re aligning with an industry trend, not venturing out alone.

In closing, building an in-house observability platform with a data lake architecture is about taking control of your telemetry data. It’s about turning what was once a cost center (big bills for third-party services) into an asset (a rich, queryable repository of operational intelligence). By leveraging AWS S3 and Apache Iceberg, you gain scalability and reliability on par with the best data platforms, at a fraction of the cost. The benefits – in cost savings, flexibility, and insights – far outweigh the challenges, especially as tools and best practices in this space continue to evolve. For organizations grappling with high observability costs or data silos, this approach presents a clear path forward to a more sustainable and powerful observability strategy. Happy Laking!