Scaling Observability: Designing a High-Volume Telemetry Pipeline - Part 3

In Part 2, we saw that scaling observability pipelines involves specialized strategies for each telemetry signal type. For metrics, scalable architectures use distributed storage, aggregation, and downsampling to handle high volumes. Trace pipelines employ sampling strategies such as head-based, tail-based, and remote sampling to manage trace volume. For logs, it makes sense to limit indexing to metadata fields instead of the full log line.

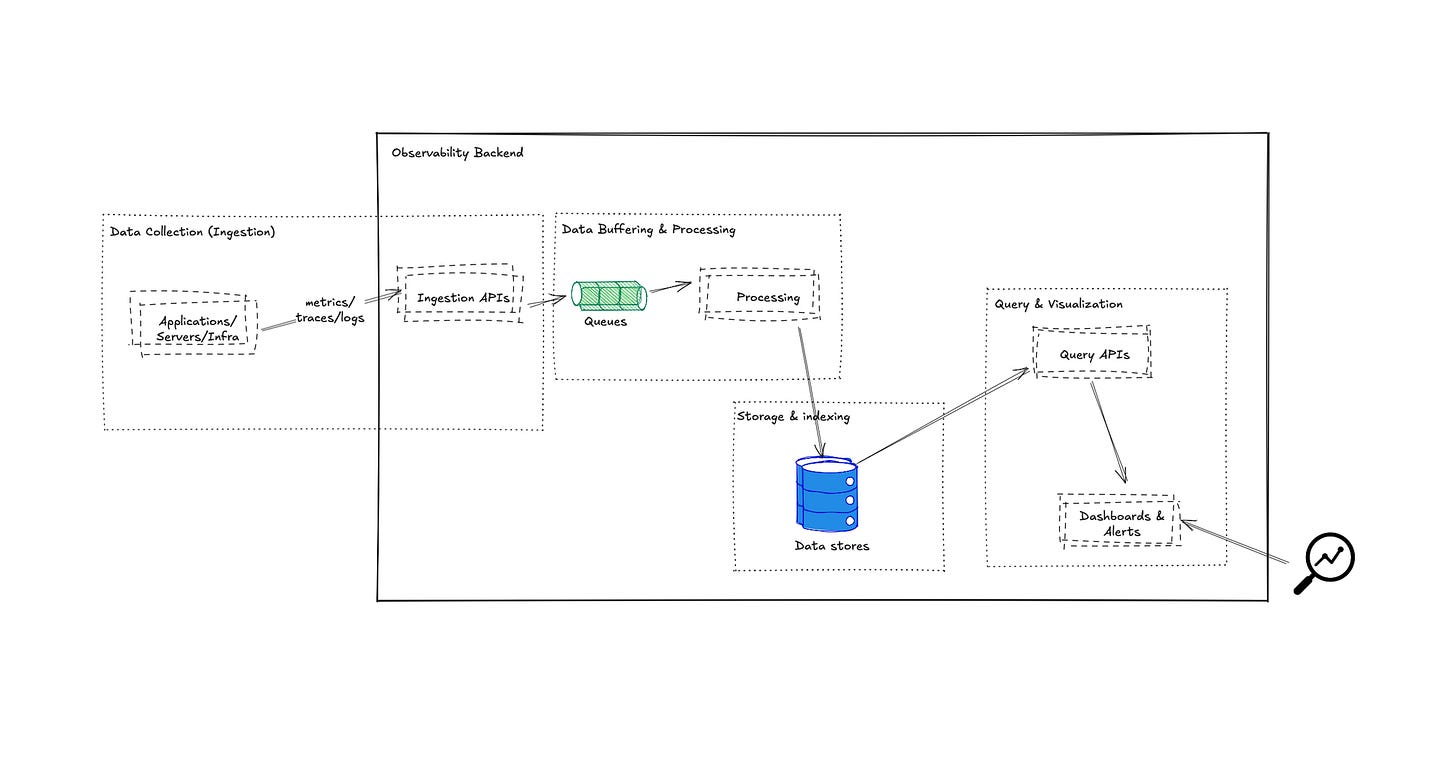

Now, let's assemble these scaled-up telemetry pipelines end to end—covering ingestion, stream processing, and buffering/backpressure strategies.

Telemetry Ingestion at Scale: Collectors, Agents, and Message Buses

The ingestion layer is the foundation of the telemetry pipeline. In a high-volume scenario, how you collect and ingest data can make or break the pipeline. Key considerations include overhead on sources, network efficiency, and ensuring no single choke point.

Agents and Collectors

As mentioned, it’s common to run lightweight agent daemons on each host (or sidecars in each pod) for each type of data: e.g. Fluent Bit for logs, OpenTelemetry Collector (in agent mode) for metrics and traces. The agent’s job is to collect data from local sources (files, OS, app SDKs) and forward it. Using agents is important at scale for a few reasons: it offloads work from the application (e.g. the app just logs to a file, the agent ships it), and it enables batching and compression. Batching multiple telemetry data points together dramatically improves throughput – sending 1000 spans in one payload is far more efficient than each span separately. The OpenTelemetry Collector, for instance, has a batching processor that groups spans or metrics before export. This improves network usage and reduces load on downstream systems.
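To make the batching idea concrete, here is a minimal sketch of a size-or-timeout batcher in plain Python. It is not the OpenTelemetry Collector's implementation; the class name `Batcher`, its parameters, and the `export` callback are hypothetical and only illustrate the general technique of grouping items before sending them.

```python
import time
from typing import Callable, List, Optional


class Batcher:
    """Groups items and flushes when the batch is full or has waited too long."""

    def __init__(self, export: Callable[[List[dict]], None],
                 max_size: int = 1000, max_wait_s: float = 0.2,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._export = export          # hypothetical sink, e.g. one network call per batch
        self._max_size = max_size
        self._max_wait_s = max_wait_s
        self._clock = clock
        self._items: List[dict] = []
        self._first_at: Optional[float] = None

    def add(self, item: dict) -> None:
        if not self._items:
            self._first_at = self._clock()  # remember when this batch started
        self._items.append(item)
        if len(self._items) >= self._max_size:
            self.flush()

    def tick(self) -> None:
        """Call periodically; flushes a partial batch that has waited too long."""
        if self._items and self._clock() - self._first_at >= self._max_wait_s:
            self.flush()

    def flush(self) -> None:
        if self._items:
            batch, self._items = self._items, []
            self._first_at = None
            self._export(batch)


sent: List[List[dict]] = []
batcher = Batcher(export=sent.append, max_size=3)
for i in range(7):
    batcher.add({"span_id": i})
batcher.flush()  # ship the final partial batch
```

Seven spans leave as three payloads instead of seven. The timeout path (`tick`) matters just as much in practice, since it bounds how long a quiet source holds data back.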

Moreover, these collectors can do initial preprocessing: add uniform tags (like environment name), scrub sensitive data (drop PII), or enrich events (e.g. add Kubernetes pod labels to all logs and traces). Enrichment is critical in Kubernetes: the agent knows the pod name, namespace, node, etc., so adding those to every log line or span provides context needed later. Doing this at the edge saves expensive joins later.
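As an illustration of edge preprocessing, the sketch below scrubs email addresses from a log message and attaches static context tags. The function name, the tag keys, and the email pattern are assumptions made for this example; a real agent would take pod metadata from the Kubernetes API and would need a broader PII policy than one regex.

```python
import re
from typing import Dict

# Deliberately simple email pattern, for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def enrich_and_scrub(record: Dict[str, str], static_tags: Dict[str, str]) -> Dict[str, str]:
    """Return a copy of the record with emails redacted and context tags added."""
    out = dict(record)
    out["message"] = EMAIL_RE.sub("[REDACTED_EMAIL]", out.get("message", ""))
    for key, value in static_tags.items():
        out.setdefault(key, value)  # never overwrite a field the source already set
    return out


# Hypothetical tags an agent might know about its own node and pod.
NODE_TAGS = {"env": "prod", "k8s.namespace": "checkout", "k8s.pod": "cart-7d9f"}
record = enrich_and_scrub({"message": "login failed for jane@example.com"}, NODE_TAGS)
```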

OpenTelemetry Collector deserves a special mention as a pluggable, vendor-neutral component. It can receive metrics, logs, and traces using various protocols, apply processors (filtering, sampling, aggregation), and export to various backends. At scale, you might deploy it in a two-tier mode: one collector on each node (agent) and a pool of collectors as a central service (gateway). The agents feed into the gateway cluster, which then feeds storage. This two-tier approach isolates concerns and allows scaling the middle tier separately.

Decoupling with Message Queues

To achieve resilience and smoothing of traffic, inserting a durable message queue/broker in the pipeline is a common strategy. Apache Kafka is a popular choice due to its high throughput and durability. Kafka can handle millions of messages per second if appropriately provisioned (with enough broker nodes and partitions). By funneling telemetry through Kafka topics (e.g. a topic for logs, one for traces, etc.), you ensure that temporary spikes or downstream outages don’t cause data loss – data will queue in Kafka (up to retention limits) until consumers catch up. It also lets you fan-out consumers: for instance, one consumer group could feed data to your primary storage, another consumer group could drive a real-time analytics job in parallel, all reading from the same stream of data.

In practice, using Kafka adds operational complexity (managing a Kafka cluster) and some latency (usually a few seconds), but it significantly decouples components. Fluent Bit -> Kafka -> Elasticsearch is more robust than Fluent Bit -> Elasticsearch directly: if Elasticsearch slows down, Kafka buffers the data, whereas with direct ingestion you’d either start dropping logs or push backpressure back into Fluent Bit. Many observability systems (Datadog, Splunk, etc.) decouple ingestion in a similar way internally, even if it’s not exposed.

Other queue technologies such as RabbitMQ or NATS could be used for smaller-scale or low-latency needs, but Kafka is the go-to for big data streaming. Some organizations even use Redis as a short buffer (e.g. Redis lists as a queue for metrics) when they want simplicity and are okay with data in memory/disk for short term. Custom in-memory queues might be built for ultra-low latency requirements (but then you risk losing data on crash, unless you persist to disk like Kafka does). Generally, Kafka’s combination of throughput and durability is hard to beat for telemetry pipelines.

Protocol Choices

It’s worth noting the protocols used: OpenTelemetry uses gRPC-based OTLP by default for sending data which is efficient (binary, compressed). Fluent Bit might use Fluent protocol or just write to Kafka. Some use HTTP for simplicity (like Prometheus remote write uses HTTP); at very high scale, gRPC or Kafka’s binary protocol is preferred for efficiency. The pipeline should avoid overly chatty or text-based protocols (e.g. older syslog over TCP might not cut it for huge volumes without tweaking).

Ordering and Grouping

If you require ordering (like logs in exact order per source) or grouping (all spans of a trace together for tail sampling), design the ingestion to respect that. Kafka maintains order within a partition, and with the default partitioner records that share a key go to the same partition, so choosing the key is important. For logs, you might key by a combination of source and time bucket to keep relative ordering in partitions. For traces, keying by trace ID ensures all spans of that trace land in one partition (and thus one consumer at a time). This aids tail sampling as discussed.
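The effect of keying by trace ID can be sketched without a Kafka client. The example below maps a key to a partition with a stable hash. Note the assumption: `zlib.crc32` is only a stand-in here (Kafka's default Java partitioner uses murmur2), and the partition count of 12 is arbitrary.

```python
import zlib
from typing import Dict, Set


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition with a stable hash (crc32 as a stand-in for murmur2)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


spans = [
    {"trace_id": "a1b2", "name": "GET /cart"},
    {"trace_id": "a1b2", "name": "SELECT items"},
    {"trace_id": "ffee", "name": "POST /pay"},
]

# Record which partitions each trace's spans land on.
placement: Dict[str, Set[int]] = {}
for span in spans:
    placement.setdefault(span["trace_id"], set()).add(partition_for(span["trace_id"], 12))
```

Every trace ends up with exactly one partition in `placement`, which is what lets a single consumer see the whole trace. The mapping only holds while the partition count stays fixed; adding partitions changes where existing keys land.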

Overhead on Sources

High-volume pipelines must ensure they don’t hurt the applications. Agents should be lightweight – e.g., Fluent Bit’s footprint is small (tens of MB of memory). If using sidecar containers for collection, ensure CPU/memory requests are set appropriately to not starve the app container. One anti-pattern is putting too much work on application threads (like heavy log formatting or synchronous exports) – avoid that by shifting it to the agent. Also, consider rate limiting at the source if absolutely needed. For example, if an app suddenly floods 10x normal logs due to a bug, the agent could detect this and sample those logs rather than bringing down the whole pipeline.
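One way to express "rate limit at the source, then sample the overflow" is a token bucket. The sketch below is a hypothetical helper, not a feature of any particular agent; `rate`, `burst`, and `overflow_keep` are illustrative parameters, and the caller supplies timestamps so the behaviour is easy to reason about.

```python
import random
from typing import Callable


class LogThrottle:
    """Token bucket: pass logs up to `rate` per second, sample whatever exceeds it."""

    def __init__(self, rate: float, burst: float, overflow_keep: float = 0.1,
                 rng: Callable[[], float] = random.random) -> None:
        self.rate = rate                    # tokens added per second
        self.burst = burst                  # maximum tokens that can accumulate
        self.overflow_keep = overflow_keep  # fraction of over-budget logs to keep
        self.rng = rng
        self.tokens = burst
        self.last = 0.0

    def allow(self, now: float) -> bool:
        # Refill tokens for the elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        # Over budget: keep only a small random sample.
        return self.rng() < self.overflow_keep


throttle = LogThrottle(rate=100, burst=100, overflow_keep=0.0)
kept = sum(throttle.allow(now=0.0) for _ in range(1000))  # a flood within one instant
```

With `overflow_keep=0.0` the flood of 1000 lines is cut to the burst size of 100. A small non-zero value keeps a sample of the flood visible, which is usually more useful than silence.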

Security and Multi-tenancy

In hybrid scenarios, data might traverse networks. Use encryption (TLS) on telemetry data in motion if crossing datacenter boundaries. Agents might authenticate to gateways. Multi-tenant pipelines tag data with tenant identifiers and ensure isolation down the line (some backends natively support multi-tenancy, e.g. Cortex/Mimir for metrics segregate data by tenant). Kafka can even have separate topics per tenant if needed, to isolate workloads and data access.

In essence, the ingestion layer must be robust, scalable, and smart: robust by not losing data during spikes (hence buffering), scalable by parallelizing inputs and using horizontal clusters, and smart by doing early drop/filter of useless data. This provides a solid feed of telemetry into the rest of the pipeline. Next, we consider if and how to do real-time processing on telemetry streams before final storage.

Real-Time Stream Processing and Enrichment

One powerful aspect of a modern telemetry pipeline is the ability to perform real-time computations on the data in transit. Instead of just shipping raw data from A to B, organizations often insert stream processing jobs that derive insights, detect anomalies, or enrich data on the fly. At high volume, this requires distributed stream processing engines such as Apache Flink or Apache Spark, which are designed to handle large event streams with stateful computations.

Use Cases for Streaming in Observability

Enrichment: Join the telemetry data with other data sources to add context. For example, augment logs with cloud metadata (like looking up an instance ID to get its region or owner), or attach application version info to traces by joining on service name. While some enrichment can be done at the agent, complex ones (like a database lookup or ML model inference) might be done in a stream processor.

Anomaly Detection and Alerts: Instead of waiting for metrics to be stored and then evaluated, a Flink job could continuously compute statistical measures or run anomaly detection algorithms (like z-scores, or even train models) on the metrics stream. This could identify unusual patterns (error rates spike, memory leak patterns in metrics) in real-time and trigger alerts or flag traces. Flink is well-suited for such event-driven applications, providing large state capacity and event-time processing for complex patterns. Typical examples are fraud detection, anomaly detection, and rule-based alerting on streams.

Metric Extraction: Some logs contain metrics that aren’t emitted elsewhere (e.g. an application might log a line “computed recommendation in 123ms”). A streaming job can parse logs and emit a custom metric (like a histogram of that latency) into the metrics system, effectively turning unstructured logs into structured metrics for easier monitoring. This can be done with tools like Logstash or Vector as well, but Flink/Spark can do it at larger scale with more complex logic.

Log Pattern Aggregation: Rather than storing millions of identical log lines, a streaming job could group them. For example, detect that an error message repeated 100,000 times in an hour and instead store one representative log with a count. Edge computing solutions or pipeline products often do “log pattern detection” upstream to reduce data. This is a form of pre-aggregation of logs that can cut down ingest load massively.

Correlating Signals: Join different telemetry streams. For instance, join traces with logs – if you have trace IDs in logs, a Flink job could take error logs and try to find the corresponding trace, then push an alert or an enriched event (like “this error log is part of trace X which had 5 other errors”). This kind of correlation can also populate a troubleshooting knowledge base or trigger automated mitigations. Another example: correlating metrics with traces (e.g. if latency metric spikes, automatically pull sample traces from that window).
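To illustrate the metric extraction use case from the list above, the following sketch parses the example log line and buckets the latency into a simple histogram. The bucket boundaries and function names are assumptions for illustration; a production job would emit to a metrics backend rather than return a list.

```python
import re
from bisect import bisect_left
from typing import List, Optional

LATENCY_RE = re.compile(r"computed recommendation in (\d+)ms")
BUCKETS_MS = [50, 100, 250, 500, 1000]  # inclusive upper bounds; one extra slot for overflow


def extract_latency_ms(line: str) -> Optional[int]:
    """Return the latency in milliseconds if the line carries one, else None."""
    match = LATENCY_RE.search(line)
    return int(match.group(1)) if match else None


def histogram(lines: List[str]) -> List[int]:
    """Count latencies per bucket; the last entry counts values above every bound."""
    counts = [0] * (len(BUCKETS_MS) + 1)
    for line in lines:
        latency = extract_latency_ms(line)
        if latency is not None:
            counts[bisect_left(BUCKETS_MS, latency)] += 1
    return counts


logs = [
    "INFO computed recommendation in 123ms",
    "INFO cache warmed",
    "INFO computed recommendation in 40ms",
    "INFO computed recommendation in 2300ms",
]
result = histogram(logs)
```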

Implementing these at scale requires an engine that can handle high event rates and maintain state with exactly-once guarantees. Apache Flink is a popular choice because it’s designed for long-running streaming jobs with strong consistency, and it efficiently manages very large state keyed by event attributes (for aggregation or joins), which makes it suitable for heavy streaming tasks like anomaly detection or real-time metrics computation. Apache Spark Streaming is another option; it traditionally micro-batches data (with very small batches) and may have slightly higher latencies, but it can process large throughput and integrates with the Spark ecosystem for machine learning models, etc.
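The kind of keyed state such a job maintains can be sketched with a rolling z-score detector. This is plain Python rather than Flink code, and the window size, threshold, and sample values are assumptions chosen for the example; in a real job one detector instance would live in state per key (for example per service).

```python
import math
from collections import deque
from typing import Deque


class ZScoreDetector:
    """Flags a value that deviates strongly from a rolling window of earlier values."""

    def __init__(self, window: int = 60, threshold: float = 3.0) -> None:
        self.values: Deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, x: float) -> bool:
        """Return True if x is anomalous versus the current window, then record it."""
        anomalous = False
        if len(self.values) >= 2:
            mean = sum(self.values) / len(self.values)
            var = sum((v - mean) ** 2 for v in self.values) / len(self.values)
            std = math.sqrt(var)
            if std > 0:
                anomalous = abs(x - mean) / std > self.threshold
        self.values.append(x)
        return anomalous


detector = ZScoreDetector(window=30, threshold=3.0)
error_rates = [1.0, 1.2, 0.9, 1.1, 1.0, 0.8, 1.1, 9.5]  # made-up error rates in percent
flags = [detector.observe(r) for r in error_rates]
```

Only the final value (9.5) is flagged. One caveat of this simple form: the anomalous value is appended to the window as well, so a sustained shift stops looking anomalous after a while.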

Imagine a pipeline where all logs go to Kafka. A Flink job reads the logs, applies a regex to categorize error messages, and maintains a count per error pattern per service per minute. It then emits a metric (service, error_pattern -> count) into a metrics backend or sends an alert if the count exceeds a threshold. At the same time, the raw logs also flow to storage (maybe Loki). In this way, you’ve offloaded counting and alerting from the heavy log search later: operators get an alert “Error X occurred 5000 times in service Y in 1 minute” immediately, with perhaps a link to one example log or trace. This is much faster than waiting to notice in a dashboard or manually searching logs, and it reduces the problem to analyzing aggregated metrics rather than countless log lines.
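The logic of that job can be sketched in plain Python as a one-minute tumbling window keyed by service and error pattern. This is a batch stand-in for what a streaming engine would do incrementally; the pattern names, regexes, and threshold are hypothetical, and late or out-of-order events are ignored for brevity.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical error categories for illustration.
PATTERNS = {
    "db_timeout": re.compile(r"timeout .* database"),
    "auth_failed": re.compile(r"authentication failed"),
}

WindowKey = Tuple[int, str, str]  # (window start in epoch seconds, service, pattern)


def classify(message: str) -> str:
    for name, pattern in PATTERNS.items():
        if pattern.search(message):
            return name
    return "other"


def count_per_minute(events: Iterable[Tuple[int, str, str]]) -> Dict[WindowKey, int]:
    """Count events per (minute, service, pattern); events are (epoch_seconds, service, message)."""
    counts: Dict[WindowKey, int] = defaultdict(int)
    for ts, service, message in events:
        window_start = ts - ts % 60  # align to the start of the minute
        counts[(window_start, service, classify(message))] += 1
    return dict(counts)


def alerts(counts: Dict[WindowKey, int], threshold: int) -> List[str]:
    return [
        f"{pattern} occurred {n} times in {service} in the minute starting at {start}"
        for (start, service, pattern), n in sorted(counts.items())
        if n > threshold
    ]


events = [(120 + i % 60, "checkout", "timeout talking to database") for i in range(5000)]
events.append((185, "checkout", "authentication failed for user"))
fired = alerts(count_per_minute(events), threshold=1000)
```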

Placement in Pipeline

Streaming processors usually subscribe to the message bus (Kafka topics) where telemetry flows. For example, you can have a Kafka consumer in Flink reading the “logs” topic, performing transformations, and then outputting to another Kafka topic or directly to storage/alerts. Some systems use event streaming frameworks like Apache Pulsar or AWS Kinesis similarly to Kafka – Flink can consume from those as well. If using an agent-to-collector direct pipeline without Kafka, you can still fork data to a stream processor via a collector that has multiple exporters (one to storage, one to an analytics service).

One must be mindful that adding streaming jobs can add processing load and possibly latency. If the requirement is real-time (<1s) detection, Flink can achieve sub-second latency with tuned pipelines, but typically expect a couple seconds end-to-end. For many monitoring cases (which often tolerate a few seconds delay), this is fine.

Resource Considerations

Running Flink at scale means provisioning a Flink cluster with enough parallel tasks to handle the input rate. You partition the stream (say by key) and Flink parallelism maps to Kafka partitions usually. This needs maintenance and monitoring itself (metrics for job lag, checkpoint duration, etc., which ironically you might feed into the same telemetry pipeline!). It’s advanced but powerful. If adopting this, ensure backpressure in the Flink job doesn’t block the main pipeline. Ideally, because Kafka decouples, if Flink is slow the Kafka topic will accumulate some lag, but that’s okay as long as within retention. You would alert on the lag and scale Flink or investigate.

In conclusion, real-time stream processing is an optional but valuable component in a high-volume telemetry pipeline for deriving immediate insights and reducing data. It leverages big data stream processors (Flink, Spark) similarly to how one would in other big data domains. The main caution is complexity – operating these systems and writing stream processing jobs requires expertise, and one must always weigh if the benefit (e.g. slightly faster detection, lower storage usage) is worth the operational cost. Many large organizations find that for certain use cases (like anti-fraud, or automated incident response), streaming analytics in the observability pipeline is indeed worth it.

Queuing, Buffering, and Backpressure Management

In any high-volume pipeline, backpressure – what happens when one part of the system can’t keep up with the incoming data – is a critical aspect. A well-designed telemetry pipeline should handle temporary slowdowns gracefully via queuing and buffering, rather than crashing or blocking upstream components.

We’ve already touched on the primary buffering mechanism: persistent queues like Kafka. By writing telemetry events to Kafka (with sufficient retention and disk space), you create a durable buffer. If, say, the log indexing service goes down for a bit, the logs pile up in Kafka and can be consumed later once it’s back (albeit with increased latency). Kafka decouples throughput differences: producers can continue at their own pace, and consumers process as fast as they can, with Kafka’s logs absorbing the difference. Kafka’s design of a persistent log with sequential writes allows it to handle high volumes with durability, which is why it’s widely used for backpressure handling. In essence, adding a queue means trading storage for time: you use memory or disk in the queue to hold data until the system can catch up, instead of dropping data or exerting backpressure on producers.

In-memory vs On-disk Buffers

On smaller scales or within components, in-memory buffers are used. For example, the OpenTelemetry Collector has a queue (by default, bounded memory) for each exporter pipeline. If the backend is slow, the collector’s queue starts filling. Once it hits a high-water mark, the collector can either drop data or apply backpressure upstream. The OTel Collector also has a memory limiter that can trigger once memory is near capacity, which will start shedding load (dropping new data) to protect the process. Some components (like Fluentd) use file buffering – Fluentd can spill to disk so that it doesn’t drop logs if the output is unavailable; it will retry later. This is a form of backpressure absorption (disk is the buffer). The drawback is that if the outage is long or throughput is very high, buffers can be exhausted (the disk fills up or memory runs out). Sizing buffers and setting retention limits is therefore important (e.g., keep at most 1 hour of data buffered, after which start dropping the oldest).
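A buffer bounded by both size and age, which discards the oldest entries first, might look like the sketch below. It is a simplified in-memory model (not how Fluentd or the Collector implement their buffers); the class name and limits are assumptions, and timestamps are passed in explicitly.

```python
from collections import deque
from typing import Deque, List, Tuple


class BoundedBuffer:
    """Holds records up to a size and age limit, evicting the oldest first."""

    def __init__(self, max_items: int, max_age_s: float) -> None:
        self.max_items = max_items
        self.max_age_s = max_age_s
        self.items: Deque[Tuple[float, str]] = deque()
        self.dropped = 0  # export this as a metric so data loss is visible

    def _evict(self, now: float) -> None:
        # Drop from the left (oldest) while over the size limit or past the age limit.
        while self.items and (len(self.items) > self.max_items
                              or now - self.items[0][0] > self.max_age_s):
            self.items.popleft()
            self.dropped += 1

    def put(self, now: float, record: str) -> None:
        self.items.append((now, record))
        self._evict(now)

    def drain(self, now: float) -> List[str]:
        """Return everything still within limits and empty the buffer."""
        self._evict(now)
        out = [record for _, record in self.items]
        self.items.clear()
        return out


buf = BoundedBuffer(max_items=3, max_age_s=3600)
for t in range(5):
    buf.put(now=float(t), record=f"log-{t}")
remaining = buf.drain(now=10.0)
```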

Backpressure vs Dropping

There are two options when a stage is saturated: exert backpressure upstream (i.e., make producers slow down) or drop data. In observability, dropping data is often acceptable (missing some telemetry might be better than causing an outage of the app). For metrics, losing a couple of data points usually just shows as a gap. For logs and traces, dropping means you lose some insight but the system stays up. On the other hand, if you propagate backpressure to the applications (e.g. a trace SDK blocking a request thread because the exporter queue is full), that can impact production traffic – usually not acceptable. Therefore, telemetry pipelines prefer an “eventually drop” strategy under extreme overload rather than slowing the source. This is why many telemetry systems implement dropping policies: e.g. “if my span queue is full, start sampling more aggressively (dropping spans)”. For example, an OpenTelemetry Collector under memory pressure will shed load by dropping new spans so that it doesn’t run out of memory. It’s a deliberate trade-off: you lose visibility rather than risk system stability.
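The "drop rather than block the request thread" policy can be sketched with Python's standard `queue` module. The wrapper class and its method names are hypothetical; the point is only that the producer side uses a non-blocking put and counts what it drops.

```python
import queue
from typing import Optional


class NonBlockingExporterQueue:
    """Bounded queue whose producer side never waits: full means drop and count."""

    def __init__(self, capacity: int) -> None:
        self._queue: "queue.Queue[dict]" = queue.Queue(maxsize=capacity)
        self.dropped = 0

    def offer(self, span: dict) -> bool:
        """Called on the request path; returns immediately whether or not there is room."""
        try:
            self._queue.put_nowait(span)
            return True
        except queue.Full:
            self.dropped += 1
            return False

    def poll(self) -> Optional[dict]:
        """Called by the background exporter; returns None when nothing is queued."""
        try:
            return self._queue.get_nowait()
        except queue.Empty:
            return None


q = NonBlockingExporterQueue(capacity=2)
accepted = [q.offer({"span_id": i}) for i in range(5)]
```

Here the first two spans are accepted and the remaining three are dropped without ever blocking the caller. Exposing `dropped` as a metric is what turns silent loss into something you can alert on.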

However, a degree of backpressure signalling can be useful in less critical parts. For example, Fluent Bit might intentionally not acknowledge log reads if the forwarder is behind, causing a container runtime’s log driver to apply backpressure (preventing log file from growing infinitely). This might slow the application’s logging (which typically is non-blocking anyway if writing to a file). Or Kafka producers can be configured with acks=all and limited in-flight requests, so if brokers are slow, the producers will wait – this effectively paces the ingestion to what the cluster can handle. This is fine as long as it doesn’t directly impact end-user response times (it might just mean logs are slightly delayed).

Horizontal Scaling to Avoid Backpressure

The primary solution to backpressure is to scale out the bottleneck. If your Kafka consumer (log indexer) can’t keep up, add more consumers (and likely more Kafka partitions to parallelize). If one Elasticsearch node is maxed on ingest, add more nodes (shards) to split load. Essentially every stage should be scalable so that the system can adapt to higher throughput rather than just queue forever. Monitoring plays a role: you should watch queue lengths and consumer lags. For example, monitor Kafka lag – if it’s growing consistently, that’s a red flag that consumption can’t keep up with production. Similarly, monitor the CPU of your ingesters or memory of your collectors to spot bottlenecks early.
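A lag check of the kind described can be reduced to two small functions. The sketch assumes you already have per-partition end offsets and committed offsets from your Kafka tooling (how you fetch them is out of scope here); the function names and the "three rising samples" rule are illustrative choices.

```python
from typing import Dict, List


def total_lag(end_offsets: Dict[int, int], committed: Dict[int, int]) -> int:
    """Sum over partitions of (latest offset - committed offset), never negative."""
    return sum(max(end - committed.get(p, 0), 0) for p, end in end_offsets.items())


def lag_is_growing(samples: List[int], min_samples: int = 3) -> bool:
    """True when each of the last `min_samples` readings exceeds the one before it."""
    if len(samples) < min_samples + 1:
        return False
    recent = samples[-(min_samples + 1):]
    return all(later > earlier for earlier, later in zip(recent, recent[1:]))


lag_now = total_lag(end_offsets={0: 1500, 1: 900}, committed={0: 1000, 1: 900})
steady_growth = lag_is_growing([100, 90, 200, 400, 900])
noisy = lag_is_growing([100, 400, 300, 350])
```

Alerting on a sustained trend rather than a single high reading avoids paging on short bursts that the queue is designed to absorb.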

Backpressure in Streaming Jobs

If you use streaming processors like Flink, they have built-in backpressure signaling: if a sink is slow, the slowdown propagates upstream until the source reads from Kafka more slowly. This means data accumulates in Kafka (as intended). Flink metrics will show this as increasing checkpoint durations or a rising share of time that upstream tasks spend backpressured. You’d then act by scaling the Flink job or fixing the slow sink.

Graceful Degradation

Some pipelines implement graceful degradation under extreme load. For instance, dynamically lower the sampling rate (keeping fewer traces to reduce volume) if the tracing backend is falling behind, or drop all debug-level logs when the system is in “overload mode”. These require feedback mechanisms and are complex but can save the day. A simpler version is having hard caps – e.g., if the logging rate exceeds X MB/s, start dropping non-critical logs.
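A feedback rule of this sort can be as simple as mapping consumer lag to a keep probability. The sketch below is one possible shape, not a standard algorithm: the soft and hard limits, the linear ramp, and the floor are all assumptions you would tune for your own pipeline.

```python
def keep_probability(lag: int, soft_limit: int, hard_limit: int, floor: float = 0.01) -> float:
    """Fraction of traces to keep: 1.0 up to soft_limit, `floor` at hard_limit and above."""
    if lag <= soft_limit:
        return 1.0
    if lag >= hard_limit:
        return floor
    # Linear ramp between the two limits.
    fraction_used = (lag - soft_limit) / (hard_limit - soft_limit)
    return 1.0 - fraction_used * (1.0 - floor)


def should_keep_log(level: str, overloaded: bool) -> bool:
    """In overload mode, shed the lowest-severity logs first."""
    return not (overloaded and level.upper() in {"DEBUG", "TRACE"})


healthy = keep_probability(lag=0, soft_limit=1000, hard_limit=10000)
halfway = keep_probability(lag=5500, soft_limit=1000, hard_limit=10000)
saturated = keep_probability(lag=50000, soft_limit=1000, hard_limit=10000)
```

Keeping a non-zero floor means that even at the worst point a small sample of traces still gets through, so the overload itself remains observable.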

Suppose a spike occurs and your log volume doubles for 5 minutes (maybe a bug causing a flood of warnings). Your pipeline is Fluent Bit -> Kafka -> Elasticsearch. What happens? Fluent Bit will push to Kafka as fast as it can. Kafka brokers, if not sized for this, might start to see high disk IO, but they will likely handle it by writing to disk (using available retention headroom). The consumers reading into Elasticsearch might fall behind because Elasticsearch can index only a little faster than the normal rate. Kafka’s lag grows. After the 5 minutes, the log rate returns to normal. Now the Elasticsearch consumers use that spare capacity to work through the backlog (maybe for the next 30 minutes) until the lag returns to zero. No data was lost, just delayed. If another spike comes before the lag has cleared, the backlog accumulates further. If Kafka’s retention (say 1 day or a set byte size) is exceeded, the oldest messages (which might not have been processed yet) will be deleted. That sets an upper bound on how much burst you can handle without loss.
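The arithmetic behind this scenario is worth making explicit, because the backlog only clears if the indexer has spare capacity above the normal rate. The numbers below (100,000 events per minute normally, indexing capacity of 115,000 per minute) are assumptions picked for illustration, not measurements.

```python
def backlog_after_spike(normal_rate: float, spike_multiplier: float,
                        spike_minutes: float, index_capacity: float) -> float:
    """Events left unprocessed at the end of the spike (rates are per minute)."""
    spike_rate = normal_rate * spike_multiplier
    return max(spike_rate - index_capacity, 0.0) * spike_minutes


def drain_minutes(backlog: float, normal_rate: float, index_capacity: float) -> float:
    """Minutes to clear the backlog once traffic is back to normal."""
    spare = index_capacity - normal_rate
    if spare <= 0:
        return float("inf")  # no headroom: the lag never shrinks
    return backlog / spare


backlog = backlog_after_spike(normal_rate=100_000, spike_multiplier=2.0,
                              spike_minutes=5, index_capacity=115_000)
minutes = drain_minutes(backlog, normal_rate=100_000, index_capacity=115_000)
```

With these assumed figures the spike leaves 425,000 events queued and takes roughly 28 minutes to drain. With zero headroom the drain time is infinite, which is why indexing capacity should be sized above the steady-state rate.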

From the above example, the key backpressure management points are sizing (Kafka had enough spare capacity), monitoring (know your lag), and alerting (if lag exceeds a certain threshold, alert so that someone can scale or investigate).

In scenarios without Kafka, say an OTel Collector exporting directly to the backend, you’d rely on its internal queue. If the backend is slow, the queue fills and then the collector starts dropping data. That’s fine if the slowdown is brief, but if it persists, you’ll lose data. Therefore, many prefer an external queue for anything mission-critical.

In short, buffering and backpressure management ensure your pipeline remains robust under sudden load spikes or downstream slowness. Use persistent queues for big stages, tune in-memory buffers for smaller ones, and prefer dropping telemetry data over causing application interference when push comes to shove. And always keep an eye on those queues!

In Part 4, we’ll address how to make the entire pipeline horizontally scalable and highly available, explore cost management and retention policies, and examine lessons learned from production deployments. Happy Flinking!